BYON - Bring Your Own Number (2024)

If you want to submit a number to be featured on the BYON project's YouTube channel, please use this Google Form.

About the Piece

BYON (or Bring Your Own Number) is an audiovisual piece with 1,000,001 movements. Little by little, these movements are being discovered, and whoever discovers a movement has the right to name it.

Each movement consists of stereo music and video, both generated by computer code and based only on a numeric input. The music and video are unique for each number, ranging from (and including) 0 to 1,000,000. Yet, despite the seemingly random nature of the process, the sounds and visuals are not entirely chaotic. Through careful planning, coherence emerges across the movements, even in the absence of direct human control, making BYON an exploration of compromise with the unknown and an exercise in acceptance.

BYON’s discovered numbers are posted on the project’s YouTube channel on a first-come, first-served basis. After the Google Form was opened to the public, BYON received 39 submissions in the first 10 days, the vast majority from people who found out about the project through YouTube recommendations. Submissions fell suddenly after that, leading me to believe that YouTube’s algorithm stopped recommending BYON videos.

BYON’s first live performance was on November 13, 2024, at Yes We Cannibal in Baton Rouge, Louisiana. The project draws inspiration from the 2024 total solar eclipse in North America, the YouTube channels Numberphile and Stand-up Maths, the video game The Binding of Isaac, and Jupiter’s rings, discovered in 1979 by the passing Voyager 1 spacecraft.

About the Code

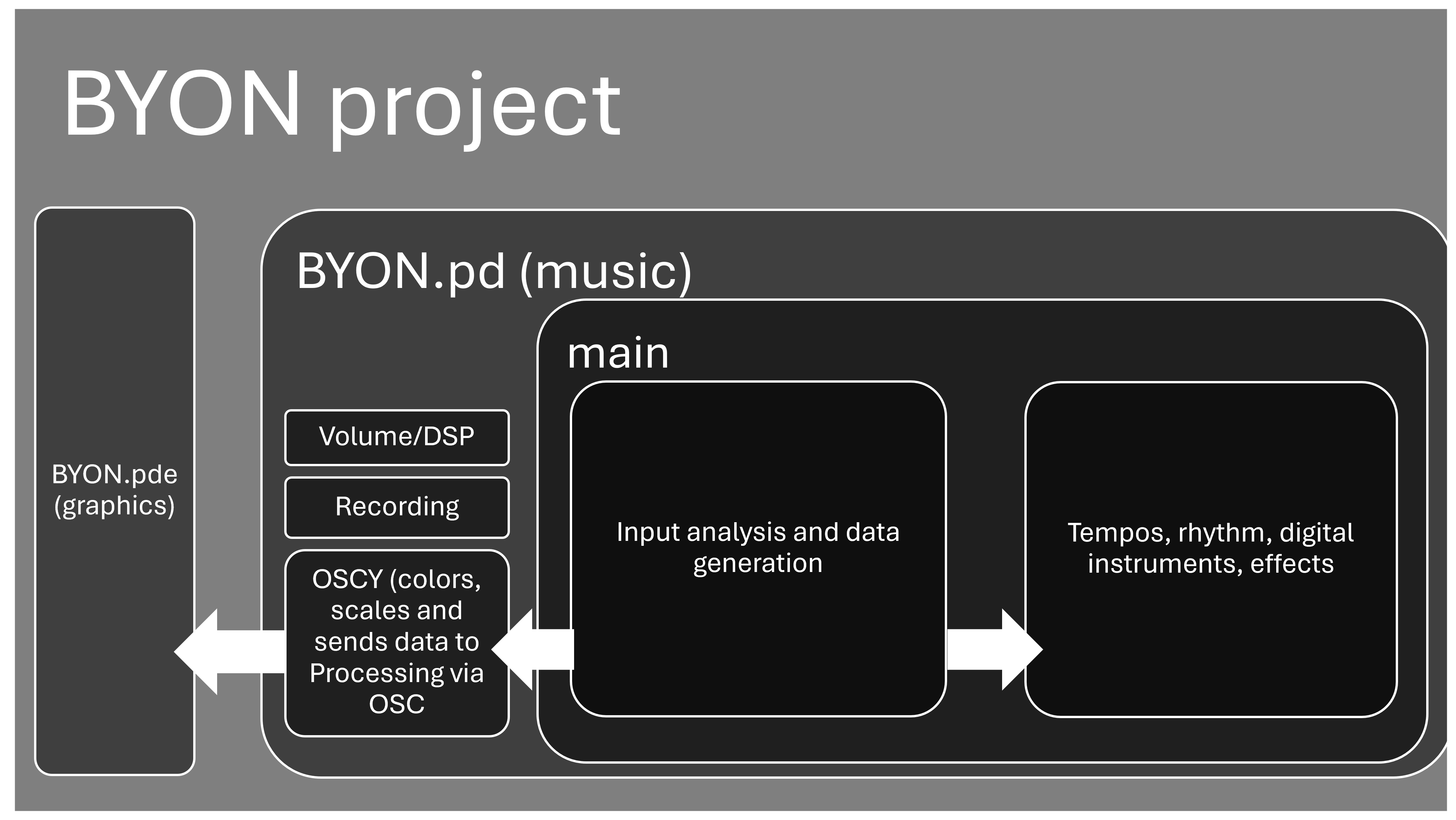

The diagram at the end of this page represents how BYON works. The project is coded entirely in Pure Data (using extensions present in Purr Data, where it was written) for the musical and data processing part, and in Processing/Java for the graphics part.

The top-level code in BYON.pd only handles the audio recording, the volume, and the infrastructure that allows numbers to be input without the Processing sketch’s GUI. Inside BYON.pd you will also find the abstraction [main] and the subpatch [OSCY]. [OSCY] handles the colors in the graphics and sends scaled, relevant music-generating data that is also used to generate the graphics in real time. The abstraction [main] handles the music-generating processes.

Once the user's input number, or movement number, is sent to main.pd, it passes through code that detects whether the number is 0, 1, or a prime number (all of which are considered special numbers). If the number is 0, it gives rise to a specific movement called Cagean, which is exactly 4 minutes and 33 seconds long if the title and outro pages are disregarded. Since the number 1 is neither prime nor composite, but is uniquely classified as a “unit”, it triggers a special abstraction called [unity] (found inside [orchestry]), which sends specific rhythmic information to five note-based instruments and activates all of the noise-based instruments for a piece that is one section long.
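The routing described above can be sketched in Python. This is only an illustration, not the Pure Data code itself: the function names and the returned labels are hypothetical, and trial division is assumed as the primality test because the source does not say how primes are detected.

```python
def is_prime(n: int) -> bool:
    """Trial division; cheap enough for inputs up to 1,000,000."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def classify(n: int) -> str:
    """Return a (hypothetical) routing label for a movement number."""
    if not 0 <= n <= 1_000_000:
        raise ValueError("movement numbers run from 0 to 1,000,000")
    if n == 0:
        return "cagean"      # the 4'33" movement
    if n == 1:
        return "unity"       # the unit, neither prime nor composite
    return "primary" if is_prime(n) else "composite"


if __name__ == "__main__":
    for number in (0, 1, 97, 360):
        print(number, classify(number))
```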

Prime number movements follow special rules regarding their color and form, but they use the same instruments as any composite number. The generation of prime-based music happens inside the abstraction [primary]. Composite numbers have all their prime factors extracted by the abstraction [factory] to be used as data for music and visual generation.
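As a rough idea of what a factor-extraction step like [factory] produces, here is a minimal Python sketch. The function name is hypothetical, and keeping repeated factors in the output is an assumption; the source only says that all prime factors are extracted.

```python
def prime_factors(n: int) -> list[int]:
    """Prime factors of n in ascending order, repeats included (an assumption)."""
    if n < 2:
        raise ValueError("only integers >= 2 have a prime factorization")
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:   # strip every copy of the divisor d
            factors.append(d)
            n //= d
        d += 1
    if n > 1:               # whatever remains is itself prime
        factors.append(n)
    return factors


if __name__ == "__main__":
    print(prime_factors(360))
```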

After being sent to [main], composite numbers pass through code that establishes how many sections a piece will have and whether there will be transitions between these sections, as opposed to sudden changes. [main] also creates 20 seeded random variables that are scaled and used throughout the piece when necessary.
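The idea of seeded random variables is that the same number always yields the same "random" values, so a movement sounds the same every time it is rendered. A minimal Python sketch follows; using the movement number itself as the seed, the 0-to-1 range, and the function names are all assumptions made for illustration.

```python
import random


def seeded_variables(movement: int, count: int = 20) -> list[float]:
    """Return `count` reproducible values in [0, 1) for one movement."""
    rng = random.Random(movement)   # private generator, seeded by the number
    return [rng.random() for _ in range(count)]


def scale(x: float, low: float, high: float) -> float:
    """Map a value from [0, 1) onto the range [low, high)."""
    return low + x * (high - low)


if __name__ == "__main__":
    values = seeded_variables(360)
    # Hypothetical use: turn the first variable into a tempo in BPM.
    print(round(scale(values[0], 60, 180), 1))
```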

[formality], a subpatch inside [main], will then establish form and transitions, triggering the beginning and end of sections and turning instruments on and off in real time. During any given section, 3 to 7 instruments will be sounding at the same time.

All the information generated directly inside [main] goes to [khrony], which controls the tempo and rhythmic figures used by the instruments that depend on this information. If [main] establishes that 3 instruments will be performing, for example, it sends this information to [khrony], which will either trigger a textural patch directly or control the temporal aspects of other instruments.

There are 13 patches and code sections used for tempo and rhythm control inside [khrony]. They can be one of the following 7 types:

- Code that converts all the prime factors of a number to binary and then outputs the resulting digits in a loop at a given speed. A bang is sent every time a 1 is output.

- Code that converts the input number to binary and then outputs the resulting digits in a loop at a given speed. A bang is sent every time a 1 is output.

- Temporizers: a [temporizer] chooses between 15 different rhythmic figures that fit into a tactus in real time, based on a probability curve with 15 points. For more details, please refer to my temporizer documentation.

- Tattarrattats: named after the longest palindromic word in the English language, coined by Joyce in his novel Ulysses to represent a knock on the door. Each of the two tattarrattats generates palindromic, or non-retrogradable, rhythms.

- Euclideans: generate Euclidean rhythms. There are two of them in [khrony].

- Bakery: generates rhythms based on the Lazy Caterer’s sequence.

- Ostinato: generates ostinatos.
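Several of the rhythm types listed above can be sketched in a few lines of Python. This is a hedged illustration only: every function name is hypothetical, a 1 in a pattern stands in for a Pd bang, the Euclidean formula is one common way to spread onsets evenly (it may yield a rotation of the pattern another algorithm gives), and how the actual patches map these values to time is not stated in the source.

```python
import random
from itertools import cycle, islice


def binary_pattern(n: int) -> list[int]:
    """Binary digits of n as steps; a 1 stands for a bang."""
    return [int(bit) for bit in bin(n)[2:]]


def factor_pattern(factors: list[int]) -> list[int]:
    """Concatenate the binary digits of each prime factor."""
    return [bit for f in factors for bit in binary_pattern(f)]


def loop(pattern: list[int], length: int) -> list[int]:
    """Repeat a pattern until `length` steps have been output."""
    return list(islice(cycle(pattern), length))


def palindrome(pattern: list[int]) -> list[int]:
    """Mirror a pattern around its last step (non-retrogradable rhythm)."""
    return pattern + pattern[-2::-1]


def euclidean(pulses: int, steps: int) -> list[int]:
    """Spread `pulses` onsets as evenly as possible over `steps` slots."""
    if not 0 <= pulses <= steps:
        raise ValueError("need 0 <= pulses <= steps")
    return [1 if (i * pulses) % steps < pulses else 0 for i in range(steps)]


def lazy_caterer(k: int) -> int:
    """k-th Lazy Caterer number: most pieces made by k straight cuts."""
    return (k * k + k + 2) // 2


def choose_figure(weights: list[float], rng: random.Random) -> int:
    """Pick a rhythmic-figure index from a probability curve (one weight each)."""
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]


if __name__ == "__main__":
    print(loop(binary_pattern(5), 7))
    print(euclidean(3, 8))
    print([lazy_caterer(k) for k in range(5)])
```

A temporizer-like choice would call `choose_figure` with 15 weights, one per rhythmic figure, and a seeded `random.Random` so that the result stays reproducible for a given number.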

The rhythmic information is sent in real time to [orchestry], where it is routed to different instruments every time. Of the eighteen instruments, ten are more noise-based, although some can emit clean tones depending on the input variables. The other six instruments generate notes either by completely digital audio synthesis or by using samples.

The signal from the instruments is then sent to [fxy], where reverb, delay, and granular synthesis may be applied. Additionally, compression and panning are always added to each instrument.

The last two parts of the code are the subpatches [coda] and [postit]. [coda] is only triggered about half of the time, creating a fade-out effect at the end of the movement instead of allowing it to stop abruptly. [postit] applies a final general reverb and clips any signal that might get out of control.
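The two safety steps at the end of the chain, a fade-out and a hard clip, can be illustrated on a plain list of samples. This Python sketch assumes a linear fade and a clipping limit of 1.0; neither detail is given in the source, and the function names are hypothetical.

```python
def fade_out(samples: list[float]) -> list[float]:
    """Linear fade from full level on the first sample to silence on the last."""
    n = len(samples)
    if n < 2:
        return list(samples)
    return [s * (1 - i / (n - 1)) for i, s in enumerate(samples)]


def clip(sample: float, limit: float = 1.0) -> float:
    """Hard-limit a sample to the range [-limit, limit]."""
    return max(-limit, min(limit, sample))


if __name__ == "__main__":
    tail = [1.7, -2.0, 0.5]
    print([clip(s) for s in fade_out(tail)])
```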

Some of the data generated in the initial stages of this process is sent to a Processing sketch via OSC, generating graphics in real time. Each instrument controls one specific graphical object.
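To show what travels over the wire in such a setup, here is a minimal Python sketch that builds an OSC 1.0 message containing float arguments and sends it over UDP using only the standard library. The address `/inst/1`, the host, and port 12000 are hypothetical placeholders; the addresses and port BYON actually uses are not given in the source.

```python
import socket
import struct


def _pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    return raw + b"\x00" * (4 - len(raw) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def send(packet: bytes, host: str = "127.0.0.1", port: int = 12000) -> None:
    """Send one packet over UDP (host and port are assumptions)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


if __name__ == "__main__":
    # Hypothetical: instrument 1 reports an amplitude of 0.5.
    send(osc_message("/inst/1", 0.5))
```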